Asymptotic Notations

Asymptotic Analysis of algorithms (asymptotic notations)

The resources an algorithm needs are usually expressed as a function of its input size. Often this function is messy and complicated to work with. To study the function's growth efficiently, we reduce it to its most important part.

Let f(n) = an² + bn + c

In this function, the n² term dominates once n gets sufficiently large.

Dominant terms are what we are interested in when reducing a function: we ignore all constants and coefficients and look only at the highest-order term in n.

Asymptotic notation:

The word Asymptotic means approaching a value or curve arbitrarily closely (i.e., as some sort of limit is taken).

Asymptotic analysis

It is a technique for representing limiting behavior, with applications across the sciences. In computer science it is used in the analysis of algorithms, to describe how an algorithm performs when applied to very large inputs.

A simple example is the function ƒ(n) = n² + 3n: the term 3n becomes insignificant compared to n² when n is very large. The function ƒ(n) is said to be "asymptotically equivalent to n² as n → ∞", written symbolically as ƒ(n) ~ n².
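The claim that 3n becomes insignificant can be checked numerically. The sketch below is only an illustration; the helper name `ratio_to_n_squared` is made up for this example. It prints ƒ(n) / n², which equals 1 + 3/n and therefore gets closer to 1 as n grows.

```python
def ratio_to_n_squared(n: int) -> float:
    """Return f(n) / n^2 for f(n) = n^2 + 3n (hypothetical helper)."""
    f = n * n + 3 * n
    return f / (n * n)


# The ratio shrinks toward 1 as n grows, which is what f(n) ~ n^2 means.
for n in (10, 100, 1_000, 10_000):
    print(n, ratio_to_n_squared(n))
```

A finite table like this does not prove the limit, but it shows how quickly the lower-order term stops mattering.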

Asymptotic notations are used to express the fastest and slowest possible running times of an algorithm, also referred to as the 'best case' and 'worst case' scenarios respectively.

"In asymptotic notations, we derive the complexity in terms of the size of the input (for example, in terms of n)."

"These notations are important because we can estimate the complexity of an algorithm without expending the cost of running it."

We have discussed Asymptotic Analysis, and the Worst, Average, and Best Cases of Algorithms. The main idea of asymptotic analysis is to have a measure of the efficiency of algorithms that doesn't depend on machine-specific constants and doesn't require the algorithms to be implemented and the running times of the programs to be compared. Asymptotic notations are mathematical tools to represent the time complexity of algorithms for asymptotic analysis.

There are mainly three asymptotic notations:

- Big-O Notation (O-notation)

- Omega Notation (Ω-notation)

- Theta Notation (Θ-notation)

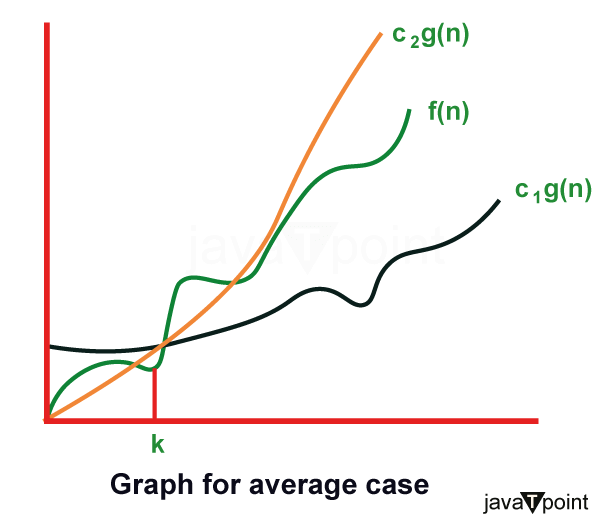

1. Theta Notation (Θ-Notation):

Theta notation encloses the function from above and below. Since it represents the upper and the lower bound of the running time of an algorithm, it is used for analyzing the average-case complexity of an algorithm.

Let g and f be functions from the set of natural numbers to itself. The function f is said to be Θ(g) if there are constants c1, c2 > 0 and a natural number n0 such that c1 * g(n) ≤ f(n) ≤ c2 * g(n) for all n ≥ n0.


Mathematical Representation of Theta notation:

Θ (g(n)) = {f(n): there exist positive constants c1, c2 and n0 such that 0 ≤ c1 * g(n) ≤ f(n) ≤ c2 * g(n) for all n ≥ n0}

Note: Θ(g) is a set

In other words, if f(n) is theta of g(n), then the value of f(n) always lies between c1 * g(n) and c2 * g(n) for large values of n (n ≥ n0). The definition of theta also requires that f(n) be non-negative for values of n greater than n0.

Here g(n), scaled by the constants c1 and c2, serves as both a lower and an upper bound on the algorithm's running time.

That is, it bounds the growth of the running time from both above and below for sufficiently large inputs.

A simple way to get the Theta notation of an expression is to drop the low-order terms and ignore the leading constant. For example, 3n³ + 6n² + 6000 = Θ(n³). Dropping lower-order terms is always fine because there is always some value of n beyond which n³ exceeds any constant multiple of n², irrespective of the constants involved. For a given function g(n), Θ(g(n)) denotes a set of functions. Examples:

{ 100, log(2000), 10^4 } belongs to Θ(1)

{ (n/4), (2n+3), (n/100 + log(n)) } belongs to Θ(n)

{ (n^2+n), (2n^2), (n^2+log(n)) } belongs to Θ(n^2)

Note: Θ provides exact bounds.
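To make the definition concrete, the sketch below checks the sandwich c1 * g(n) ≤ f(n) ≤ c2 * g(n) for the expression 3n³ + 6n² + 6000 with g(n) = n³. The helper name `holds_theta_bounds` and the constants c1 = 3, c2 = 4, n0 = 21 are choices made for this illustration, not values from the text, and a check over a finite range is evidence rather than a proof.

```python
def holds_theta_bounds(f, g, c1, c2, n0, n_max):
    """Check c1*g(n) <= f(n) <= c2*g(n) for every n in [n0, n_max]."""
    return all(c1 * g(n) <= f(n) <= c2 * g(n) for n in range(n0, n_max + 1))


def f(n):
    return 3 * n**3 + 6 * n**2 + 6000


def g(n):
    return n**3


# Assumed witnesses: c1 = 3, c2 = 4, n0 = 21.
print(holds_theta_bounds(f, g, c1=3, c2=4, n0=21, n_max=10_000))  # True
# Starting one step earlier fails: f(20) = 32400 > 4 * 20**3 = 32000.
print(holds_theta_bounds(f, g, c1=3, c2=4, n0=20, n_max=10_000))  # False
```

The second call shows why the definition needs n0: the upper bound only takes hold once n is large enough for n³ to absorb the lower-order terms.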

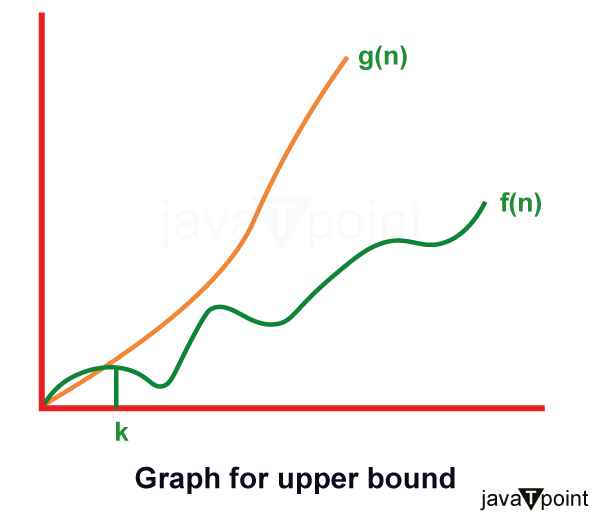

2. Big-O Notation (O-notation):

Big-O notation represents the upper bound of the running time of an algorithm. Therefore, it gives the worst-case complexity of an algorithm.

If f(n) describes the running time of an algorithm, f(n) is O(g(n)) if there exist positive constants c and n0 such that 0 ≤ f(n) ≤ c * g(n) for all n ≥ n0.

Big-O describes the largest growth the running time can have for a given input size: c * g(n) serves as an upper bound on the algorithm's running time.

Mathematical Representation of Big-O Notation:

O(g(n)) = { f(n): there exist positive constants c and n0 such that 0 ≤ f(n) ≤ cg(n) for all n ≥ n0 }

For example, consider the case of Insertion Sort. It takes linear time in the best case and quadratic time in the worst case. We can safely say that the time complexity of Insertion Sort is O(n²).

Note: O(n²) also covers linear time.

If we use Θ notation to represent the time complexity of Insertion Sort, we have to use two statements for the best and worst cases:

- The worst-case time complexity of Insertion Sort is Θ(n²).

- The best-case time complexity of Insertion Sort is Θ(n).

Big-O notation is useful when we only have an upper bound on the time complexity of an algorithm. Often we can find an upper bound simply by looking at the algorithm.
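The best-case and worst-case behavior of Insertion Sort can be observed by counting key comparisons. The sketch below is one straightforward implementation written for illustration; the function name `insertion_sort_comparisons` and the choice to count comparisons (rather than measure time) are assumptions of this example.

```python
def insertion_sort_comparisons(values):
    """Sort a copy of `values`; return (sorted_list, number_of_key_comparisons)."""
    a = list(values)
    comparisons = 0
    for i in range(1, len(a)):
        key = a[i]
        j = i - 1
        while j >= 0:
            comparisons += 1      # one comparison of key against a[j]
            if a[j] <= key:
                break             # key is already in place relative to a[j]
            a[j + 1] = a[j]       # shift the larger element one slot right
            j -= 1
        a[j + 1] = key
    return a, comparisons


n = 100
_, best = insertion_sort_comparisons(range(n))          # already sorted input
_, worst = insertion_sort_comparisons(range(n, 0, -1))  # reverse-sorted input
print(best, worst)  # 99 4950
```

For n = 100 the sorted input needs n - 1 = 99 comparisons (linear), while the reversed input needs n(n - 1)/2 = 4950 (quadratic). Both counts are covered by the single statement O(n²).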

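Examples:

{ 100, log(2000), 10^4 } belongs to O(1)

U { (n/4), (2n+3), (n/100 + log(n)) } belongs to O(n)

U { (n^2+n), (2n^2), (n^2+log(n)) } belongs to O(n^2)

Note: Here, U represents union. Each set can be written as the union of the sets above it with new functions because O gives an upper bound that need not be exact: every function in O(1) is also in O(n), and every function in O(n) is also in O(n^2).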

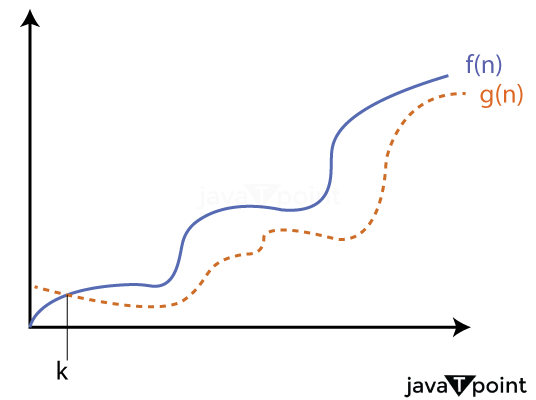

3. Omega Notation (Ω-Notation):

Omega notation represents the lower bound of the running time of an algorithm. Thus, it provides the best case complexity of an algorithm.

Here g(n), scaled by a constant, serves as a lower bound on the algorithm's running time.

It describes the shortest amount of time in which the algorithm can complete, as a function of the input size.

Let g and f be functions from the set of natural numbers to itself. The function f is said to be Ω(g) if there is a constant c > 0 and a natural number n0 such that c * g(n) ≤ f(n) for all n ≥ n0.

Mathematical Representation of Omega notation :

Ω(g(n)) = { f(n): there exist positive constants c and n0 such that 0 ≤ cg(n) ≤ f(n) for all n ≥ n0 }

Let us consider the same Insertion sort example here. The time complexity of Insertion Sort can be written as Ω(n), but it is not very useful information about insertion sort, as we are generally interested in worst-case and sometimes in the average case.

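Examples:

{ (n^2+n), (2n^2), (n^2+log(n)) } belongs to Ω(n^2)

U { (n/4), (2n+3), (n/100 + log(n)) } belongs to Ω(n)

U { 100, log(2000), 10^4 } belongs to Ω(1)

Note: Here, U represents union. Each set can be written as the union of the sets above it with new functions because Ω gives a lower bound that need not be exact: every function in Ω(n^2) is also in Ω(n), and every function in Ω(n) is also in Ω(1).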

Properties of Asymptotic Notations:

1. General Properties:

If f(n) is O(g(n)) then a*f(n) is also O(g(n)), where a is a positive constant.

Example:

f(n) = 2n²+5 is O(n²)

then, 7*f(n) = 7(2n²+5) = 14n²+35 is also O(n²).

Similarly, this property holds for both Θ and Ω notation.

We can say,

If f(n) is Θ(g(n)) then a*f(n) is also Θ(g(n)), where a is a positive constant.

If f(n) is Ω(g(n)) then a*f(n) is also Ω(g(n)), where a is a positive constant.

2. Transitive Properties:

If f(n) is O(g(n)) and g(n) is O(h(n)) then f(n) = O(h(n)).

Example:

If f(n) = n, g(n) = n² and h(n)=n³

n is O(n²) and n² is O(n³), so n is O(n³).

Similarly, this property holds for both Θ and Ω notation.

We can say,

If f(n) is Θ(g(n)) and g(n) is Θ(h(n)) then f(n) = Θ(h(n)).

If f(n) is Ω (g(n)) and g(n) is Ω (h(n)) then f(n) = Ω (h(n))
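The reason transitivity holds for O is that the witnesses compose: if f(n) ≤ c1 * g(n) for n ≥ n1 and g(n) ≤ c2 * h(n) for n ≥ n2, then f(n) ≤ c1 * c2 * h(n) for n ≥ max(n1, n2). The sketch below applies this to the example f(n) = n, g(n) = n², h(n) = n³; the helper name `compose_witnesses` and the witness values are assumptions made for illustration.

```python
def compose_witnesses(c1, n1, c2, n2):
    """Combine witnesses for f = O(g) and g = O(h) into a witness for f = O(h)."""
    return c1 * c2, max(n1, n2)


def f(n):
    return n


def g(n):
    return n**2


def h(n):
    return n**3


# Assumed witnesses: n <= 1 * n^2 for n >= 1, and n^2 <= 1 * n^3 for n >= 1.
c, n0 = compose_witnesses(c1=1, n1=1, c2=1, n2=1)
print(c, n0)  # 1 1
print(all(f(n) <= c * h(n) for n in range(n0, 1001)))  # True
```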

3. Reflexive Properties:

The reflexive property follows directly from the definition.

For any given f(n), f(n) is O(f(n)), since f(n) ≤ 1 * f(n) for all n: f(n) is its own upper bound with c = 1.

Hence every function is related to itself under O.

Example:

f(n) = n² is O(n²), i.e., O(f(n)).

Similarly, this property holds for both Θ and Ω notation.

We can say that,

If f(n) is given then f(n) is Θ(f(n)).

If f(n) is given then f(n) is Ω (f(n)).

4. Symmetric Properties:

If f(n) is Θ(g(n)) then g(n) is Θ(f(n)).

Example:

If f(n) = n² and g(n) = n²

then f(n) = Θ(n²) and g(n) = Θ(n²).

This property holds only for Θ notation.

5. Transpose Symmetric Properties:

If f(n) is O(g(n)) then g(n) is Ω (f(n)).

Example:

If f(n) = n and g(n) = n²

then n is O(n²) and n² is Ω(n).

This property holds only for O and Ω notations.

6. Some More Properties:

1. If f(n) = O(g(n)) and f(n) = Ω(g(n)) then f(n) = Θ(g(n))

2. If f(n) = O(g(n)) and d(n)=O(e(n)) then f(n) + d(n) = O( max( g(n), e(n) ))

Example:

f(n) = n, i.e., O(n)

d(n) = n², i.e., O(n²)

then f(n) + d(n) = n + n², i.e., O(n²)

3. If f(n)=O(g(n)) and d(n)=O(e(n)) then f(n) * d(n) = O( g(n) * e(n))

Example:

f(n) = n, i.e., O(n)

d(n) = n², i.e., O(n²)

then f(n) * d(n) = n * n² = n³, i.e., O(n³)
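Both the sum and the product rule can be spot-checked for the examples above, f(n) = n and d(n) = n². In the sketch below the constant 2 for the sum (one unit for each summand) and the constant 1 for the product are witnesses chosen for this illustration, and the finite range stands in for "all n ≥ 1".

```python
def f(n):
    return n


def d(n):
    return n**2


N = range(1, 5001)

# Sum rule: n + n^2 <= 2 * max(n, n^2) = 2 * n^2 for n >= 1.
sum_ok = all(f(n) + d(n) <= 2 * max(n, n**2) for n in N)

# Product rule: n * n^2 <= 1 * (n * n^2) = n^3.
prod_ok = all(f(n) * d(n) <= 1 * n**3 for n in N)

print(sum_ok, prod_ok)  # True True
```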

Note: If f(n) = O(g(n)) then g(n) = Ω(f(n)).

Why is Asymptotic Notation Important?

1. They give simple characteristics of an algorithm's efficiency.

2. They allow the comparisons of the performances of various algorithms.

Asymptotic Notations:

Asymptotic Notation is a way of comparing functions that ignores constant factors and small input sizes. Three notations are used to express the running time complexity of an algorithm:

1. Big-oh notation: Big-oh is the formal method of expressing the upper bound of an algorithm's running time. It is a measure of the longest amount of time the algorithm can take. The function f(n) = O(g(n)) [read as "f of n is big-oh of g of n"] if and only if there exist positive constants k and n0 such that

f(n) ≤ k * g(n) for all n ≥ n0

Hence, function g(n) is an upper bound for function f(n), as g(n) grows at least as fast as f(n).

For Example:

Hence, the complexity of f(n) can be represented as O (g (n))
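As one possible illustration (not necessarily the example originally intended here), take f(n) = 3n + 2 and g(n) = n, the pair used in the Theta example later in this article. The sketch below searches for the smallest threshold n0 that works for a given constant; the helper name `smallest_threshold` and the search limit are assumptions of this example, and the search only covers a finite range.

```python
def smallest_threshold(f, g, c, n_max):
    """Return the smallest n0 in [1, n_max] such that f(n) <= c*g(n)
    for every n in [n0, n_max], or None if the bound fails at n_max."""
    n0 = None
    for n in range(n_max, 0, -1):   # walk down from n_max
        if f(n) <= c * g(n):
            n0 = n
        else:
            break                   # bound fails here, so stop
    return n0


def f(n):
    return 3 * n + 2


def g(n):
    return n


print(smallest_threshold(f, g, c=4, n_max=1000))  # 2
print(smallest_threshold(f, g, c=3, n_max=1000))  # None
```

With the constant 4 the bound 3n + 2 ≤ 4n holds from n0 = 2 onward, so 3n + 2 = O(n). With the constant 3 no threshold works, since 3n + 2 > 3n for every n; a valid witness needs a constant strictly larger than the leading coefficient.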

2. Omega (Ω) Notation: The function f(n) = Ω(g(n)) [read as "f of n is omega of g of n"] if and only if there exist positive constants k and n0 such that

f(n) ≥ k * g(n) for all n ≥ n0

For Example:

f(n) = 8n² + 2n - 3 ≥ 8n² - 3

= 7n² + (n² - 3) ≥ 7n² for n ≥ 2, where g(n) = n²

Thus, k = 7 and n0 = 2

Hence, the complexity of f (n) can be represented as Ω (g (n))
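Each step of the inequality chain in this example can be checked mechanically. The sketch below is an illustration only; the helper name `chain_holds` is hypothetical, and the threshold n0 = 2 is the point from which the last step (which needs n² ≥ 3) is valid.

```python
def f(n):
    return 8 * n**2 + 2 * n - 3


def chain_holds(n):
    """Check each step of 8n^2 + 2n - 3 >= 8n^2 - 3 = 7n^2 + (n^2 - 3) >= 7n^2."""
    step1 = f(n) >= 8 * n**2 - 3                    # drop the non-negative term 2n
    step2 = 8 * n**2 - 3 == 7 * n**2 + (n**2 - 3)   # regroup 8n^2 as 7n^2 + n^2
    step3 = 7 * n**2 + (n**2 - 3) >= 7 * n**2       # requires n^2 >= 3
    return step1 and step2 and step3


print(all(chain_holds(n) for n in range(2, 10_001)))  # True
print(chain_holds(1))                                 # False: step 3 needs n >= 2
print(f(1) >= 7 * 1**2)                               # True
```

The last line shows that the final bound f(n) ≥ 7n² happens to hold at n = 1 as well; it is only this particular derivation that needs n ≥ 2.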

3. Theta (θ): The function f(n) = θ(g(n)) [read as "f of n is theta of g of n"] if and only if there exist positive constants k1, k2 and n0 such that

k1 * g(n) ≤ f(n) ≤ k2 * g(n) for all n ≥ n0

For Example:

3n + 2 = θ(n), as 3n + 2 ≥ 3n and 3n + 2 ≤ 4n for n ≥ 2

k1 = 3, k2 = 4, and n0 = 2

Hence, the complexity of f (n) can be represented as θ (g(n)).

The Theta notation is more precise than both the big-oh and Omega notations. The function f(n) = θ(g(n)) if g(n) is both an upper and a lower bound for f(n).
